Thanks to artificial intelligence (AI), the world is changing fast. But does this mean we lose what makes us human? Martin Heidegger, one of the 20th century’s biggest thinkers, has important ideas about technology and existence that speak volumes in the age of AI. Heidegger argued that it was risky to see everything—including humans—as just resources within a technological system. Let’s examine how his philosophy encourages us to think about AI. Perhaps it even has the power to redefine what it means for us to “be” as automation continues.

Heidegger’s Notion of Being

Heidegger’s concept of Dasein, developed in his major work Being and Time, is key to his account of human existence. Dasein (literally “being-there”) names the distinctive way humans exist: we experience the world while being aware of, and concerned with, our own Being.

We humans are different from objects or animals. For us, our own existence is always in question: we ask what it means to be, what our purpose is, and how we fit into the bigger picture around us.

Heidegger argues that to fully understand what technology is and how it affects us, we first have to get to grips with Dasein’s nature. According to Heidegger, technology has the potential to reduce human experience to mere calculation and utility, and so may deprive us of meaning or true presence.

With AI, which reproduces some facets of human intelligence, we might ask: does AI challenge our understanding of Dasein? If AI can “think,” is that a problem for the idea that human existence is unique?

As intelligent systems increasingly dominate our world, Heidegger’s investigation into Dasein prompts important questions about what it means “to be.” It also asks how we want to maintain the depth of human existence in light of technological progress.

Technology in Heidegger’s Philosophy

In his essay “The Question Concerning Technology,” Heidegger takes an intriguing look at technology. It’s not just a bunch of tools, he says—it’s a way that reveals (shows us) things in the world and helps us interact with them.

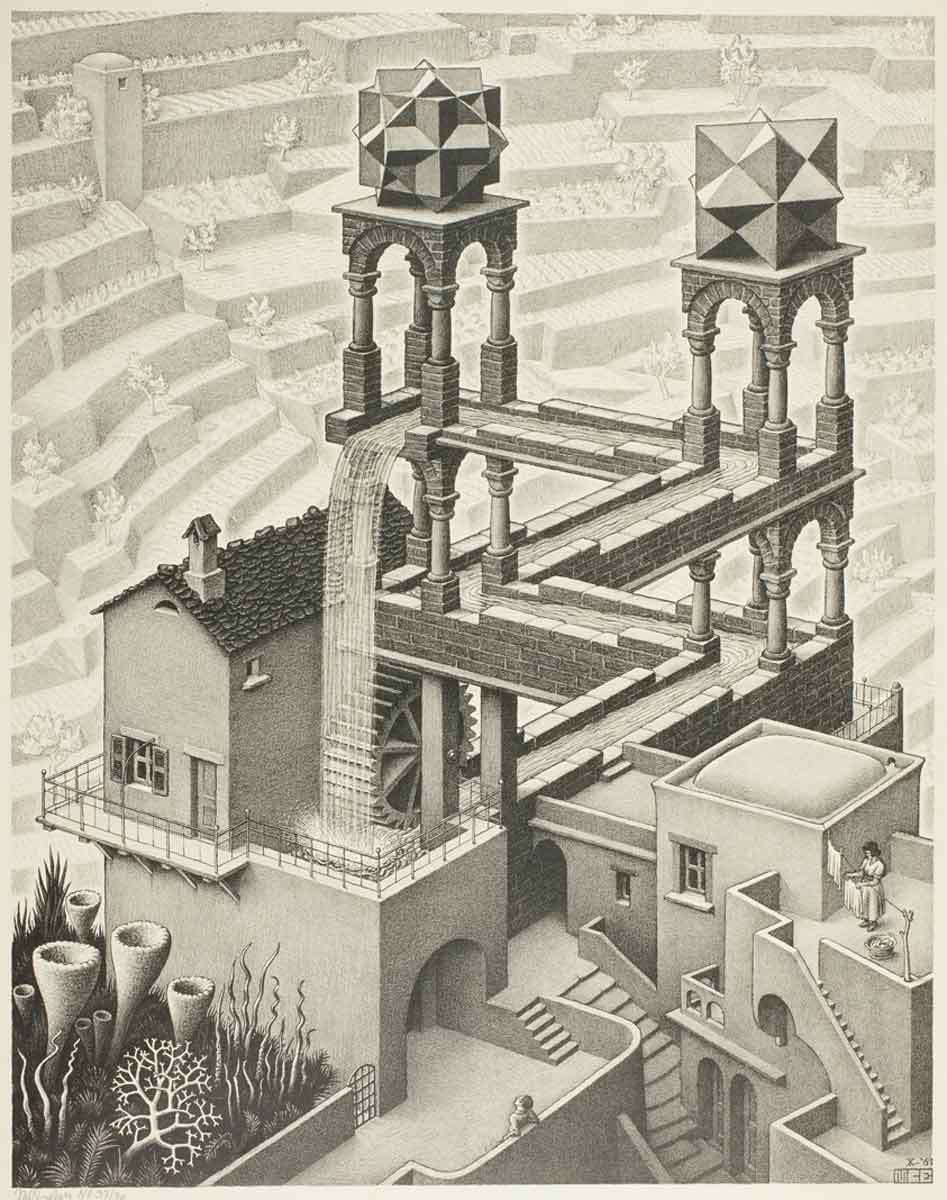

One big idea here is what he calls “Enframing” (Gestell): the way technology puts everything in order and makes it appear to us. According to Heidegger, our gadgets don’t just help us see things. They also make us see (and experience) everything, including ourselves, as stuff we can use however we want.

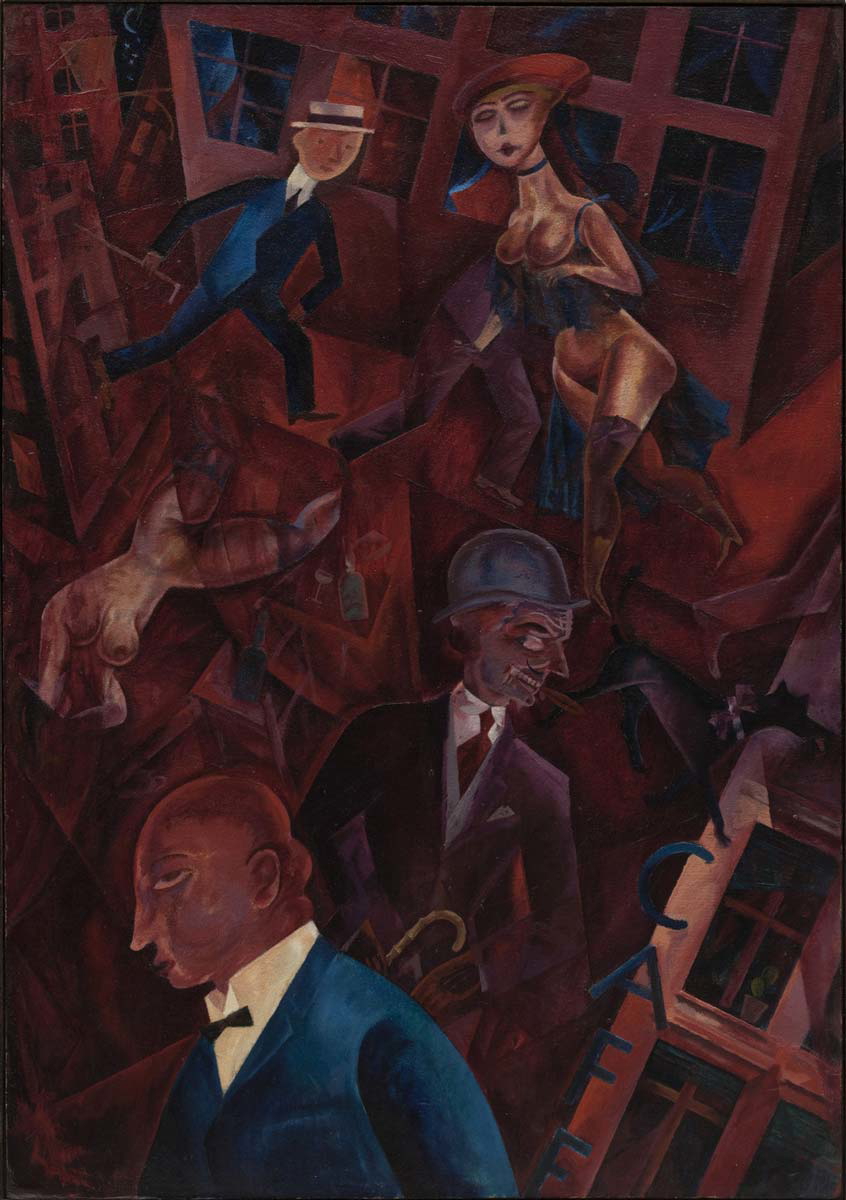

This view is pretty disturbing because if you follow it along, then nothing has value or meaning in itself. Everything just becomes resources waiting to be optimized or used up—like crude oil or coal reserves. And yes—even human beings, too.

Heidegger wrote long before today’s AI systems, but AI arguably exemplifies what he understood as the essence of Enframing. By operating on information and algorithms, AI systems foster a mindset of calculation that prizes efficiency, predictability, and control.

This calculative thinking can silently co-opt human freedom. We may start to base decisions not on judgment or values but rather on how machines would analyze them. In a world fashioned by AI, human existence could end up being just another optimization factor among many.

Heidegger urges us to recognize this peril. We should probe deeply into our relationship with technology. We need to discover how to live in a way that is mindful and true, one that safeguards our humanity in the face of encroaching calculative logic.

The Essence of AI: Tool or Autonomous Being?

Heidegger’s idea of Zuhandenheit, or “readiness-to-hand,” presents tools as things that blend so seamlessly into our daily activities that they become extensions of ourselves. We don’t even have to think about using them.

When we use a hammer, for example, we don’t see it as an object separate from us. Instead, it seems to “vanish” as we employ it to serve our own ends. AI, however, makes us question whether there is always a clear distinction between a tool and an independent entity.

AI, particularly in its more advanced forms, blurs this line. Unlike a traditional tool, AI systems can adapt, learn, and, to some extent, make decisions independent of direct human control.

This raises a profound question within Heidegger’s framework: if AI starts operating beyond its “readiness-to-hand,” does it remain just a tool, or does it come close to being an entity with its own mode of existence? As AI develops further, it might demand a new ontological category, one that challenges Heidegger’s understanding of Being.

This shift forces us to reconsider what it means for us to live alongside autonomous entities that, while created by us, operate under their own logic. What does it do to human identity, autonomy, and essence when the tools we make begin thinking, acting, and existing in ways we can’t fully predict or control?

AI and the Danger of Enframing

In his philosophy, Heidegger warns against viewing everything as a resource ready for use, an idea he calls “standing-reserve” (Bestand). Applied to a world governed by AI, this warning takes on a sinister tone.

With access to vast amounts of information, AI systems can often reduce complex human identities, emotions, and lived experiences to measurable outputs.

There is a risk we will begin to see people not as valuable beings in their own right but as data inputs that can be scrutinized, improved upon, or manipulated. People would then be just another form of standing-reserve.

This shift in perspective carries significant dangers. When we start seeing humans purely as inputs within a technological system, their uniqueness, freedom, and creativity could be compromised.

In a society that becomes centered around AI, there is the risk that attributes such as efficiency, productivity, and predictability become so important that they hollow out the things we value most about being human.

Heidegger’s warning is rooted in the idea that if everything—including humanity—is approached from the standpoint of usefulness alone, then we lose touch with what it means to exist authentically. Put bluntly, if technology starts deciding our worth, then forget about fulfillment or joy.

To avoid such an existential pitfall, Heidegger wants us to be more conscious when adopting new tools. We should think not only about how they might change us but also about how using them might redefine concepts, at both an individual and a societal level, that until now seemed set in stone.

Heidegger’s Notion of “Gelassenheit” and Its Relevance to AI

Heidegger’s concept of Gelassenheit, which translates to “releasement,” offers a profound response to the dangers of thinking only in terms of technology. It suggests being open and letting things be: using technology without letting it take over.

Rather than simply saying yes or no to technological progress, Heidegger wants us to understand that it affects us deeply. We should consider more than just whether something is useful. This mindset matters when thinking about AI because AI could undermine human freedom and the things we care about.

Applying Gelassenheit to AI means developing and using it wisely and with self-control so that AI serves humanity rather than subverting it.

This will involve establishing principles grounded in what we think is genuinely important in life: for instance, valuing liberty, dignity, or overall well-being over power or efficiency alone.

We should think carefully as we develop AI: how can we make sure that, as it gives us new abilities, it doesn’t take away things that are important to us? Releasement is about finding a balance here: using what’s good about AI systems without letting them become all-important.

In sum, this approach reminds us to hold onto aspects of being human, such as critical thinking, compassion, and real-world connection, as intelligent machines become more prevalent.

Future Directions: AI, Heidegger, and the Question of Being

As we look ahead to AI’s future, Heidegger provides an important guide to this complicated terrain. He challenges us to rethink where AI is going by reflecting on the essence of being and on technology’s role in our lives.

His work reminds us that if we’re not careful, progress in AI could leave us understanding existence less and living in a world run by algorithms, where humans are simply one more data point.

Bringing Heideggerian ideas into AI means ensuring that AI systems reflect what humans care about. We want to develop technology that enables rather than limits who we really are, technology that respects how dignity, freedom, and creativity manifest differently for each person. The aim is to foster an environment with AI that enriches our humanity rather than impoverishes it.

As we strive to create AI that benefits humanity, Heidegger’s ideas urge us to think deeply about how our innovations affect us. We can use his concept of Gelassenheit, letting things be, to help shape AI so that it doesn’t come to control or consume us completely, as Enframing threatens to.

Ultimately, Heidegger’s work prompts us to ask: how can we make sure future generations have more meaningful lives, even if they live alongside robot helpers?

So, What Does Heidegger Say About AI?

Heidegger never wrote about AI as we know it, but his philosophy has important things to say about how we should respond as it develops. It helps us think about what it means to be human in a world where AI might know more and more about us, possibly even everything. His worry was that, if we’re not careful, technology could come to define who we are.

But according to Heidegger, there’s also something we can do about it: a kind of letting-be called Gelassenheit. We should use technology mindfully so that we remain in charge. As we shape AI, and as AI shapes us, we need to think hard about this advice from Heidegger.

We still have lots of questions, too: How can Heidegger’s ideas help us build ethical AI that makes life better for humans? And does his philosophy give us any clues about how to deal with machines that don’t just seem smart but might also be conscious?