At present, few topics are as relevant and as rapidly developing as the philosophy of artificial intelligence. Philosophers have been around much longer than computers, and they are now trying to answer some of the questions that AI has raised. How does the mind work? Can machines act intelligently, as humans do, and if so, do they have real, “conscious” minds? What are the ethical implications of intelligent machines? And finally, is it possible to create true artificial intelligence?

What is Artificial Intelligence?

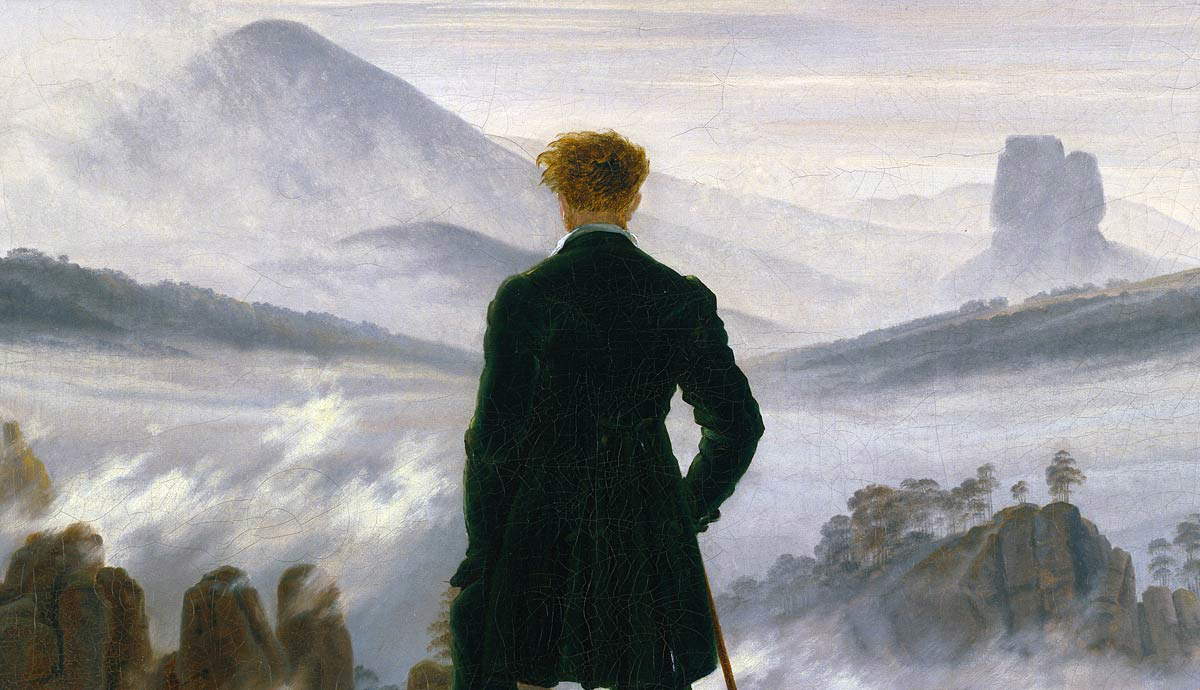

From Descartes to Turing, modern philosophy’s view of artificial intelligence has been marked by conflicting opinions and outlooks.

If your phone beats you at chess, you might think that artificial intelligence is preparing to take over the world. But what is artificial intelligence, really, and what types of AI exist? What are the latest developments in the field? Should we fear a future with AI, or look forward to it?

Artificial intelligence is the ability of digital computers, and of robots controlled by them, to perform tasks that were previously the prerogative of humans.

Modern AI projects attempt to replicate intellectual processes characteristic of human thinking, such as reasoning, generalization, learning from experience, and data analysis.

In today’s world, artificial intelligence covers a huge range of algorithms and machine learning tools that make it possible to collect data, identify patterns, and optimize processes quickly.

The term “artificial intelligence” was coined by John McCarthy in the 1955 proposal for the 1956 workshop at Dartmouth College, widely regarded as the first-ever AI conference. Many modern AI systems work by analyzing large amounts of data for patterns and correlations, which are then used to predict future events.
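As a minimal illustration of “finding a pattern in data and using it to predict,” the Python sketch below fits a straight line to a few points by ordinary least squares. The technique and the toy data (points lying exactly on y = 2x + 1) are assumptions chosen for clarity; real AI systems use far richer models and far more data.

```python
# A minimal "find the pattern, then predict" sketch: fit a straight line
# y = slope * x + intercept to observed points by ordinary least squares.

def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) minimizing the squared prediction error."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Unnormalized covariance of x and y, and unnormalized variance of x.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    if var == 0:
        raise ValueError("x values must not all be equal")
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: tuple[float, float], x: float) -> float:
    """Apply the learned pattern to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Toy observations that happen to follow y = 2x + 1 exactly.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
model = fit_line(xs, ys)
print(model)              # (2.0, 1.0)
print(predict(model, 4))  # 9.0
```

The “pattern” here is just two numbers, and the prediction for an unseen input (x = 4) follows from them; the same learn-then-predict structure scales up to the large models the text describes.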

AI programming focuses on three core skills: learning, reasoning, and self-correction. AI systems power text-generating chatbots, image and voice recognition, machine vision, natural language processing, robotics, and self-driving cars.

How Did Artificial Intelligence Originate?

The basic idea of artificial intelligence (AI) dates back to ancient times, but it was not named until the 1950s. The goal of AI is to build machines that can act, and perhaps think, like humans, or even better than humans. In the decades since, AI has become increasingly powerful as new methods have been developed to improve its effectiveness.

In the summer of 1956, a group of scientists gathered at Dartmouth College in New Hampshire to explore the possibilities of AI. Two years later, John McCarthy, one of the workshop’s organizers, created the LISP programming language at MIT; its flexibility in manipulating symbols made it a mainstay of AI research for decades.

In the same period, researchers began building programs that could learn from “experience” and data rather than only follow fixed instructions. This was a critical step toward machines with something resembling true intelligence: such programs adjusted their behavior according to whether their answers turned out to be right or wrong.

Significant progress followed. Between 1964 and 1966, Joseph Weizenbaum, a German-American computer scientist at MIT, wrote ELIZA, one of the first programs able to hold a text conversation with a person. Other programs of the period laid the foundations of modern technology.

These included game-playing programs such as Arthur Samuel’s checkers player, which learned to play well enough to beat a strong amateur. Scientists also created early systems for computer vision and natural language processing, as well as robots designed to mimic human actions in a physical environment (for example, in factories).

In the 1970s, further advances were made. Researchers developed expert systems that could make decisions in specialized areas such as medical diagnosis or legal questions.

Neural networks also gained ground. These software models, loosely inspired by networks of neurons in the brain, date back to the perceptron of the late 1950s and were revived in the 1980s; today they power applications such as self-driving cars and uncrewed aerial vehicles (drones).
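To make the idea of simulating a piece of the brain in software concrete, here is a minimal sketch of a single artificial neuron, a perceptron (Frank Rosenblatt’s 1958 model; choosing it as the example is our assumption, since the text names no specific network). It learns the logical AND function and changes its weights only when it gives a wrong answer.

```python
# A single artificial "neuron" (a perceptron) that learns logical AND.
# Its weights change only when it answers wrongly (error-driven learning).

def step(z: float) -> int:
    """Fire (1) if the weighted input is positive, otherwise stay silent (0)."""
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs: int = 20, lr: float = 1.0):
    """samples: list of ((x1, x2), target) pairs with targets 0 or 1."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            output = step(w[0] * x1 + w[1] * x2 + b)
            error = target - output  # +1, 0, or -1
            if error != 0:
                # Nudge the weights toward the correct answer.
                errors += 1
                w[0] += lr * error * x1
                w[1] += lr * error * x2
                b += lr * error
        if errors == 0:  # every answer in this pass was right: stop training
            break
    return w, b


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_DATA)
for (x1, x2), target in AND_DATA:
    # Prints: 0 0 0 / 0 1 0 / 1 0 0 / 1 1 1 (inputs, then learned output)
    print(x1, x2, step(weights[0] * x1 + weights[1] * x2 + bias))
```

A single perceptron can only learn patterns that a straight line can separate (AND and OR, but not XOR); modern networks stack many such units in layers to get past that limit.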

The 1980s, the 1990s, and the decades since saw a technology boom that led to a plethora of new AI applications, from virtual agents that communicate with customers by phone or online chat, and automated stock trading, to products such as Apple’s Siri, which understands users’ spoken requests in natural language.

Today, artificial intelligence is used in more and more areas, from healthcare, where it helps make diagnoses based on thousands of images and medical records, to finance, where banks use predictive analytics and AI tools help traders and investors make decisions faster than ever before.

However, what does philosophy have to do with the development of artificial intelligence?

René Descartes and the Question of Thinking Machines

René Descartes, one of the most influential philosophers of the modern era, lived long before computers, yet his reflections on automata anticipated questions that the philosophy of artificial intelligence still debates. In his Discourse on the Method (1637), he asked how we could tell a machine that imitated human actions apart from a real human being.

Descartes held that human beings are composed of two distinct substances: the physical body and the immaterial mind (or soul). He argued that while physical bodies can be examined objectively, thoughts and mental states are known directly only through introspection. His starting point, the certainty that he exists because he thinks, is famously summarized as “cogito ergo sum,” or “I think, therefore I am.” He developed this mind-body dualism most fully in his Meditations on First Philosophy (1641).

Because he regarded thinking as the essence of the mind, and the mind as distinct from the body, Descartes concluded that bodies, including animal bodies and man-made automata, work purely mechanically. On this view, a machine could reproduce bodily movements, but it could not think.

For Descartes, the key test of whether something could think was language. He granted that a machine might be built to utter words, even to cry out when touched in a particular place, but argued that it could never arrange words flexibly enough to give a meaningful answer to whatever is said in its presence, as even the dullest human can. His second test was general reasoning: because a machine acts only from the arrangement of its parts, it would inevitably fail in situations its builder had not anticipated.

In other words, he saw flexible language use as the essential marker of a thinking mind. Although Descartes denied that any machine could pass this test, his criterion foreshadowed the behavioral approach that Turing would take three centuries later, and in that sense it laid an important conceptual foundation for the philosophy of artificial intelligence.

Can Machines Think?

“Can machines think?” The British mathematician and logician Alan Turing raised this question in his 1950 paper “Computing Machinery and Intelligence.” He noted that the traditional approach would be to begin by defining the terms “machine” and “think.”

Turing, however, took a different path. He replaced the original question, which he considered nearly impossible to answer, with another “which is closely related to it and is expressed in relatively unambiguous words.” In essence, he proposed replacing “Can machines think?” with the more tractable question “Can machines do what we (as thinking creatures) can do?”

On this view, a computer can be considered intelligent if it can convince us that we are dealing not with a machine but with a person. According to Turing, the advantage of the new question is that it draws a fairly sharp line between a person’s physical and intellectual capacities, and it can be settled by an empirical test.

A practical version of the Turing test works as follows. A judge, a human, and a machine are placed in separate rooms. The judge communicates with both in writing, without knowing in advance which interlocutor is the human and which is the machine. Response times are fixed so that the judge cannot identify the machine by its speed (in Turing’s time, machines were slower than humans; today they are often faster, so speed could still give the machine away).

If the judge cannot reliably tell which interlocutor is the machine, the machine has passed the Turing test and can be considered to think. On this interpretation, the machine would not merely imitate a human mind; it would count as one, because we would have no way of distinguishing its behavior from human behavior. This interpretation of artificial intelligence as a full-fledged equivalent of natural human intelligence has been called “strong AI.”
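The test protocol can be sketched as a small simulation. Everything in it is a hypothetical simplification: the `human`, `clumsy_machine`, `mimic_machine`, and `judge` functions, their canned replies, and the scoring rule that a machine “passes” when the judge does no better than chance (about 50% accuracy over many rounds). Response timing is not modeled.

```python
import random
from typing import Callable, Sequence

# Hypothetical types: a respondent maps a question to a typed reply; a judge
# sees the question and both anonymous replies and names the machine ("A"/"B").
Respondent = Callable[[str], str]
Judge = Callable[[str, str, str], str]


def imitation_game(judge: Judge, human: Respondent, machine: Respondent,
                   questions: Sequence[str], trials: int,
                   rng: random.Random) -> float:
    """Fraction of trials in which the judge correctly identifies the machine."""
    correct = 0
    for _ in range(trials):
        # Seat the two respondents behind labels A and B in random order,
        # so the judge cannot know in advance which is which.
        machine_is_a = rng.random() < 0.5
        a, b = (machine, human) if machine_is_a else (human, machine)
        question = rng.choice(questions)
        # Text-only channel: the judge sees nothing but the typed replies.
        guess = judge(question, a(question), b(question))
        if (guess == "A") == machine_is_a:
            correct += 1
    return correct / trials


def human(question: str) -> str:
    return "Hmm, let me think about that."


def clumsy_machine(question: str) -> str:
    return "PROCESSING QUERY: " + question  # an obvious giveaway


def mimic_machine(question: str) -> str:
    return human(question)  # indistinguishable replies, by construction


def judge(question: str, reply_a: str, reply_b: str) -> str:
    # Name whichever reply sounds mechanical; with no clue, default to "A".
    if reply_b.startswith("PROCESSING"):
        return "B"
    return "A"


QUESTIONS = ["Do you like poetry?", "What is 34957 + 70764?"]
rng = random.Random(0)
print(imitation_game(judge, human, clumsy_machine, QUESTIONS, 1000, rng))  # 1.0
print(imitation_game(judge, human, mimic_machine, QUESTIONS, 1000, rng))   # roughly 0.5
```

The clumsy machine is caught every time, while the judge’s accuracy against the perfect mimic falls to chance, which is exactly the situation in which the text says the machine has passed. Note that the simulation measures only behavior; it says nothing about whether the mimic “understands” anything.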

Note that the Turing test does not require the machine to “understand” the words and expressions it uses. It only needs to imitate meaningful responses successfully in order to pass.

A Criticism of the Turing Test: Searle’s Chinese Room Experiment

In 1980, the American philosopher John Rogers Searle proposed a thought experiment that challenged the Turing test and the idea that producing the right responses is enough to show understanding.

The Chinese room experiment runs as follows. A man is placed in a room that contains baskets full of Chinese characters.

The man is given a rule book, written in English, that explains how to combine Chinese characters. He can apply these rules easily even though he does not speak Chinese, because the rules refer only to the shapes of the symbols, not to their meanings.

Searle imagines that people outside the room who understand Chinese pass strings of characters into the room. In response, the man consults the rule book, manipulates the characters accordingly, and passes a string of characters back out through the door.

Thus, the man in the room passes a kind of Turing test in a language he does not understand. Searle concludes that the Turing test is not a criterion for the presence of understanding or consciousness at all, but only for the ability to manipulate symbols successfully.

The essence of Searle’s position on artificial intelligence is this: the mind operates with semantic content (meaning), whereas a computer program is defined entirely by its syntactic structure (the form of the symbols it manipulates). Since syntax alone is not sufficient for semantics, passing the Turing test does not imply the presence of a mind.
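Searle’s scenario can be mimicked in a few lines of Python. This is a toy under obvious assumptions: the rule book is reduced to a lookup table (Searle’s rules could be arbitrarily complex), and the two sample exchanges and the fallback reply are invented for illustration. The final loop adds a check of our own that is not part of Searle’s argument: relabeling every symbol with a meaningless code point leaves the room’s behavior unchanged, one way to see that the procedure depends only on form.

```python
# Toy model of Searle's Chinese room. The "rule book" is a lookup table from
# input strings to output strings; the operator only checks whether symbol
# shapes match (string equality) and never consults what they mean.

RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",          # "How are you?" -> "I'm fine, thanks."
    "你会说中文吗？": "会，说得很流利。",    # "Do you speak Chinese?" -> "Yes, very fluently."
}
FALLBACK = "请再说一遍。"                 # "Please say that again."


def room(message: str, rules: dict[str, str], fallback: str) -> str:
    """Return whatever reply the rule book pairs with this exact symbol string."""
    return rules.get(message, fallback)


def relabel(text: str, table: dict[str, str]) -> str:
    """Replace every symbol with its counterpart in the table."""
    return "".join(table[ch] for ch in text)


# Swap every character for a meaningless private-use code point (a one-to-one
# relabeling). If the room's behavior depends only on form, the relabeled room
# must give exactly the relabeled answers.
symbols = sorted(set("".join(RULE_BOOK) + "".join(RULE_BOOK.values()) + FALLBACK))
TABLE = {s: chr(0xE000 + i) for i, s in enumerate(symbols)}
SCRAMBLED_RULES = {relabel(q, TABLE): relabel(a, TABLE) for q, a in RULE_BOOK.items()}
SCRAMBLED_FALLBACK = relabel(FALLBACK, TABLE)

for question in RULE_BOOK:
    reply = room(question, RULE_BOOK, FALLBACK)
    scrambled_reply = room(relabel(question, TABLE), SCRAMBLED_RULES, SCRAMBLED_FALLBACK)
    print(question, "->", reply, scrambled_reply == relabel(reply, TABLE))
```

The scrambled room answers just as “correctly” as the original, even though its symbols no longer mean anything to anyone, which is the intuition behind Searle’s claim that symbol manipulation alone does not amount to understanding.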

What is the Modern Take on the Philosophy of Artificial Intelligence?

The modern philosophical perception of artificial intelligence has evolved significantly since Descartes and Turing. Today, many philosophers recognize that machines can simulate aspects of human cognition and decision-making, but they are less convinced that machines are truly conscious or possess a mind in the same sense as humans.

One important feature of the contemporary philosophy of AI is its close connection to cognitive science. Many cognitive scientists view the mind as a computational system and have therefore sought to understand how algorithms and programs could mimic the workings of the human mind.

However, they tend to be cautious about the extent to which artificial systems can really “understand” meaning or truly “reason” about information in the way humans do.

Philosophy of mind, the branch of philosophy that studies consciousness and mental states, asks whether consciousness can arise from computational processes. Some philosophers argue that it emerges from sufficiently complex computation, whereas others claim that it cannot be mechanized at all.

Another approach to AI in philosophy comes from ethics. Philosophers explore the ethical issues that arise when developing AI technologies, such as who bears responsibility when a machine makes an error, and whether a machine’s behavior should be judged by the same standards as human behavior.

While some skepticism remains about whether computation alone can produce true intelligence, most philosophers acknowledge that AI systems can achieve remarkable feats, particularly when experts build them with complex decision-making components that resemble human reasoning.